Biography

Xintao Wang (王鑫涛) is a fourth-year Ph.D candidate in the School of Computer Science at Fudan University. He is deeply fascinated by ACG (Anime, Comics & Games) culture and is devoted to revolutionizing the ACG industry with AI techniques. His research therefore focuses primarily on human-like generative agents and their personas, including (but not limited to):

- Role-Playing Language Agents: Creating AI agents that faithfully represent specific personas, including: (1) accurate and nuanced evaluation of LLMs’ role-playing abilities; (2) construction of high-quality persona datasets from established fiction; (3) development of foundation models with advanced role-playing abilities; (4) agent frameworks and applications for role-playing, such as multi-agent systems for creative storytelling.

- Cognitive Modeling in Language Models: Integrating anthropomorphic cognition into LLMs, such as ego-awareness, social intelligence, and personality. The goal is to improve LLMs’ understanding of their own inner world and that of others, enabling them to generate more cognitively aligned and human-like responses.

- Large Language Models

- Autonomous Agents

- Role-Playing Language Agents

- ACG, J Pop, Cosplay

- Ph.D in NLP, 2021 - 2026 (estimated), Fudan University

- B.S in CS, 2017 - 2021, Fudan University

News

- Apr. 2025: Check out BookWorld! In BookWorld, we create multi-agent societies for characters from fictional books, enabling them to engage in dynamic interactions that transcend their original narratives, thereby crafting innovative storytelling.

- Feb. 2025: 🔔We are thrilled to introduce CoSER: Coordinating LLM-Based Persona Simulation of Established Roles, a collection comprising a high-quality authentic dataset, open state-of-the-art models, and a nuanced evaluation protocol for role-playing LLMs.

- Feb. 2025: 🔔Check out PowerAttention, a novel sparse attention design with an exponentially growing receptive field for the Transformer architecture, facilitating effective and complete context extension.

- Oct. 2024: 🔔The first Survey on Role-Playing Agents has been accepted to TMLR!

- Sept. 2024: 🔔Our paper Evaluating Character Understanding of Large Language Models via Character Profiling from Fictional Works was accepted to EMNLP 2024, and Capturing Minds, Not Just Words: Enhancing Role-Playing Language Models with Personality-Indicative Data was accepted to EMNLP 2024 Findings!

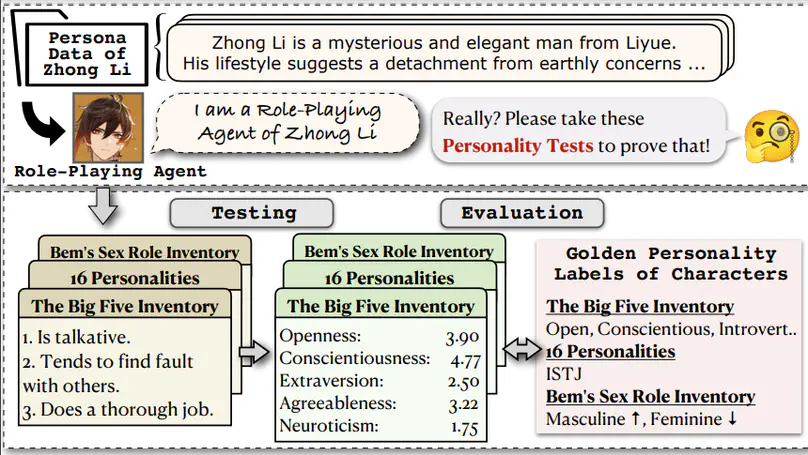

- Aug. 2024: 🇹🇭Attending ACL 2024@Bangkok! 🧙 I will present InCharacter while cosplaying as the iconic character Zhong Li from Genshin Impact!

- May 2024: 🔔Our InCharacter was accepted to ACL 2024, and Light Up the Shadows was accepted to ACL 2024 Findings!

- Apr. 2024: InCharacter will be presented in the poster session in Agent Workshop @ Carnegie Mellon University!

- Apr. 2024: The first Survey on Role-Playing Agents is out! Dive into our comprehensive survey of RPLA technologies, their applications, and the exciting potential for human-AI coexistence. Understanding role-playing paves the way for both personalized assistants and multi-agent societies. Check out our latest survey on role-playing agents!

- Apr. 2024: Check out SurveyAgent! This system offers a unified platform that supports researchers through the various stages of their literature review process, facilitated by a conversational interface that prioritizes user interaction and personalization! Access it via the homepage and have fun!

- Mar. 2024: As a member of Takway.AI, I’m thrilled to announce that we secured the Second Prize in the InternLM Competitions, hosted by the Shanghai Artificial Intelligence Laboratory!

- Feb. 2024: Check out InCharacter! Self-assessments of RPAs are inherently flawed, since they depend heavily on the LLM’s own understanding of personality. Instead, our work interviews characters using 14 different psychological scales, providing a more objective description of LLMs’ role-playing abilities. Check out this project demo!

Experience

Awards

Featured Publications

“Now we know that we are living our lives in a book.”

“If what you say is true, I’m going to run away from the book and go my own way.”

In BookWorld, we create multi-agent societies for characters from fictional books, enabling them to engage in dynamic interactions that transcend their original narratives, thereby crafting innovative storytelling.

In this paper, we present CoSER, a collection of a high-quality dataset, open models, and an evaluation protocol towards effective RPLAs of established characters. The CoSER dataset covers 17,966 characters from 771 renowned books, providing authentic dialogues with real-world intricacies & diverse data types such as conversation setups, character experiences and internal thoughts. Drawing from acting methodology, we introduce given-circumstance acting for training and evaluating role-playing LLMs, where LLMs sequentially portray multiple characters in book scenes. We release CoSER 8B and CoSER 70B, i.e., state-of-the-art open role-playing LLMs built on LLaMA-3.1.

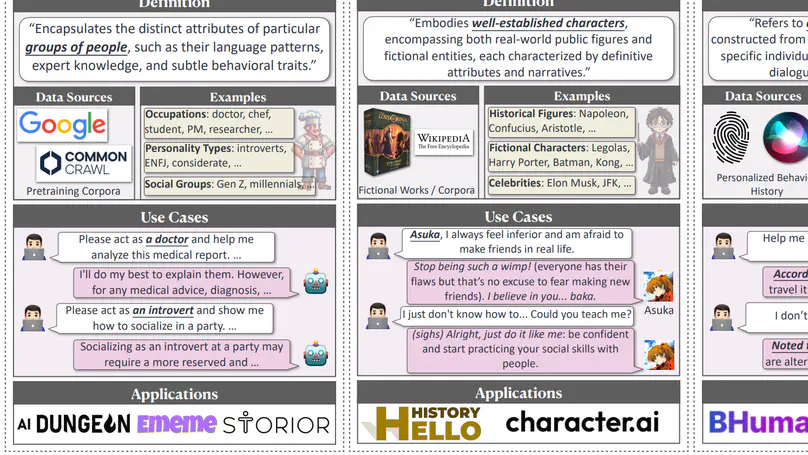

We present a comprehensive survey on role-playing language agents (RPLAs), i.e., specialized AI systems simulating assigned personas. Specifically, we distinguish personas in RPLAs into three progressive tiers - demographic persona, character persona, and individualized persona. Our survey discusses their data sourcing, agent construction, evaluation, applications, risks, limitations and future prospects.

Do role-playing language agents accurately capture character personalities? To answer this question, we propose InCharacter, which evaluates their personality fidelity via psychological interviews using 14 scales.

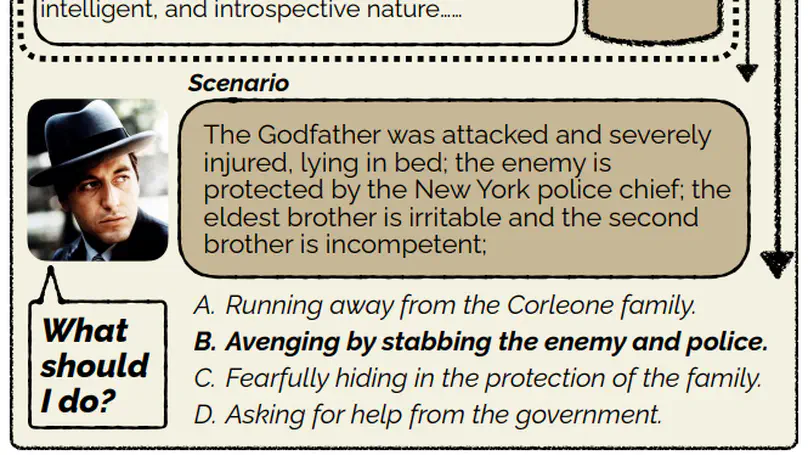

Can Large Language Models substitute humans in making important decisions? Recent research has unveiled the potential of LLMs to role-play assigned personas, mimicking their knowledge and linguistic habits. However, imitative decision-making requires a more nuanced understanding of personas. In this paper, we benchmark the ability of LLMs in persona-driven decision-making. Specifically, we investigate whether LLMs can predict characters’ decisions provided with the preceding stories in high-quality novels. Leveraging character analyses written by literary experts, we construct a dataset LIFECHOICE comprising 1,401 character decision points from 395 books. Then, we conduct comprehensive experiments on LIFECHOICE, with various LLMs and methods for LLM role-playing. The results demonstrate that state-of-the-art LLMs exhibit promising capabilities in this task, yet there is substantial room for improvement. Hence, we further propose the CHARMAP method, which achieves a 6.01% increase in accuracy via persona-based memory retrieval. We will make our datasets and code publicly available.