KnowledGPT: Enhancing large language models with retrieval and storage access on knowledge bases

Image credit: Unsplash

Abstract

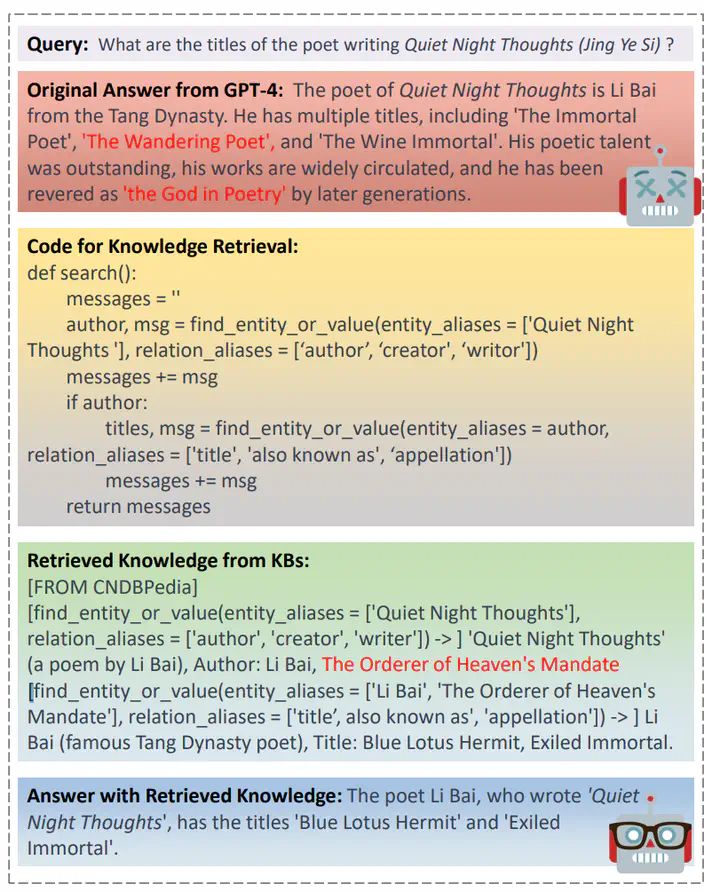

Large language models (LLMs) have demonstrated impressive impact in the field of natural language processing, but they still struggle with several issues, such as completeness, timeliness, faithfulness, and adaptability. While recent efforts have focused on connecting LLMs with external knowledge sources, the integration of knowledge bases (KBs) remains understudied and faces several challenges. In this paper, we introduce KnowledGPT, a comprehensive framework that bridges LLMs with various knowledge bases, facilitating both the retrieval and the storage of knowledge. The retrieval process employs program-of-thought prompting, which generates a search program for KBs in code format, using pre-defined functions for KB operations. Besides retrieval, KnowledGPT can store knowledge in a personalized KB, catering to individual user demands. With extensive experiments, we show that by integrating LLMs with KBs, KnowledGPT properly answers a broader range of questions requiring world knowledge than vanilla LLMs, using both knowledge that exists in widely known KBs and knowledge extracted into personalized KBs.
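The retrieval-and-storage idea described in the abstract can be illustrated with a small sketch. This is not the paper's implementation: the `ToyKB` class, the `find_entity_or_value` function name, the `run_search_program` helper, and the hard-coded "generated" program are all assumptions made for illustration, and a string literal stands in for the code an LLM would produce under program-of-thought prompting.

```python
class ToyKB:
    """Hypothetical in-memory triple store standing in for a real KB."""

    def __init__(self):
        self._triples = {}  # head entity -> relation -> set of tail values

    def add_triple(self, head, relation, tail):
        # Storage: record one (head, relation, tail) fact.
        self._triples.setdefault(head, {}).setdefault(relation, set()).add(tail)

    def find_entity_or_value(self, entity, relation):
        # Retrieval: return all tails for (entity, relation), sorted for stability.
        return sorted(self._triples.get(entity, {}).get(relation, set()))


def run_search_program(program, public_kb, personal_kb):
    """Run generated search code with only the pre-defined KB function exposed.

    Note: emptying __builtins__ narrows what the code can call, but it is not
    a real security sandbox.
    """

    def find_entity_or_value(entity, relation):
        # Assumed policy: consult the personalized KB first, then the public KB.
        return (personal_kb.find_entity_or_value(entity, relation)
                or public_kb.find_entity_or_value(entity, relation))

    scope = {"__builtins__": {}, "find_entity_or_value": find_entity_or_value}
    exec(program, scope)      # defines search() inside the restricted scope
    return scope["search"]()  # run the generated entry point


# A widely known fact in the "public" KB.
public_kb = ToyKB()
public_kb.add_triple("The Old Man and the Sea", "author", "Ernest Hemingway")

# A user-specific fact stored in the personalized KB.
personal_kb = ToyKB()
personal_kb.add_triple("user", "favorite book", "The Old Man and the Sea")

# Stand-in for LLM output answering "Who wrote my favorite book?".
GENERATED_PROGRAM = '''
def search():
    answers = []
    for book in find_entity_or_value("user", "favorite book"):
        answers.extend(find_entity_or_value(book, "author"))
    return answers
'''

result = run_search_program(GENERATED_PROGRAM, public_kb, personal_kb)
print(result)
```

The question in this sketch needs both sources: the first hop is answered from the personalized KB and the second from the public one, which mirrors the abstract's claim that combining the two widens the range of answerable questions.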
